Whispp’s approach: Real-time Assistive Voice Technology

Introduction

Globally, 500 million individuals are affected by some form of speech impairment. Losing one’s ability to speak has deep and extensive impacts on a person’s life. Communication, a basic element of human interaction, turns into a daily struggle, resulting in social isolation and frustration as people find it difficult to express their thoughts, feelings, and needs to others. Professionally, careers might be at risk as many jobs depend on effective oral communication. This can cause economic hardship and a reduction in independence. Additionally, reduced vocal capabilities can undermine self-confidence, leading to feelings of insufficiency and depression.

There is a broad range of speech disabilities, each presenting different challenges in speaking fluently, effortlessly, and clearly. To understand and classify the various types of assistive voice technologies, it helps to first look more closely at how speech is produced.

Communication, speaking, and self-expression are fundamental human rights. Yet various impairments can obstruct this ability, leading to numerous communication difficulties. From Augmentative and Alternative Communication (AAC) devices to the newest real-time assistive voice technologies, progress in this field enables communication for individuals with speech-related disabilities. The world of assistive technologies is broad and diverse, addressing multiple impairments and challenges. Nonetheless, within this context, the role of real-time assistive voice technology is distinct.

Figure 1. The CEO of Whispp arranges an interview with Dolf, a person who experiences communication challenges. The goal of the interview is to gather insights that can guide the development of more effective assistive voice technologies.

Understanding Whispp through the Source-Filter Model

The spectrum of speech impairments includes a variety of conditions that impact an individual’s capacity to produce clear and effective speech. These impairments range from articulation disorders, where certain sounds are difficult to articulate, to fluency disorders, such as stuttering, which interrupt the rhythm of speech. Voice disorders influence the pitch, loudness, or timbre of the voice, while motor speech disorders like dysarthria and apraxia involve challenges related to muscle control or neurological problems.

A fundamental framework for explaining speech generation is the “source-filter model”. This model provides insights into how the vibrations from the vocal cords (the sound source) are altered and shaped by the structure and movements of the vocal tract (the filter) to produce clear and intelligible speech.

The Source-Filter Model of speech production is essential for understanding how humans produce speech sounds. It breaks down speech production into two primary components: the “source” and the “filter.” Let’s examine how this model elucidates voice quality and articulation, and then explore how it can be applied to assist individuals using Augmentative and Alternative Communication (AAC) tools and speech-to-text technologies.

The Source: The origin of speech primarily involves air expelled from the lungs, which causes the vocal cords within the larynx to vibrate. This vibration generates a fundamental frequency, perceived as the pitch of the voice. Variations in the nature and intensity of these vibrations (e.g., loud or soft, high or low pitch) contribute to the quality of the voice.

The Filter: The filter consists of the articulatory movements within the vocal tract, including actions of the tongue, lips, and palate. Articulation disorders arise when these movements are imprecise, as observed in conditions like apraxia or due to structural anomalies. Dysarthria, marked by weakened muscle control, affects the filtering mechanism, leading to slurred or slow speech that may be challenging to comprehend.
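To make the two components concrete, here is a minimal Python sketch of the source-filter idea. It is an illustration under stated assumptions, not Whispp's technology: the sample rate, the 120 Hz pitch, the rough formant values for an "ah"-like vowel, and all function names are chosen for this example only. A voiced source is approximated by an impulse train, a whispered source by noise, and the vocal tract by a chain of simple resonators.

```python
import math
import random

SAMPLE_RATE = 16_000  # samples per second (assumed for this illustration)


def voiced_source(f0_hz, n_samples):
    """Impulse train: one pulse per cycle, standing in for vocal cord vibration."""
    period = round(SAMPLE_RATE / f0_hz)
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def whispered_source(n_samples, seed=0):
    """White noise: airflow without vocal cord vibration, so there is no pitch."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


def formant_filter(signal, centre_hz, bandwidth_hz):
    """Two-pole resonator standing in for one vocal tract resonance (formant)."""
    r = math.exp(-math.pi * bandwidth_hz / SAMPLE_RATE)  # pole radius, below 1
    theta = 2 * math.pi * centre_hz / SAMPLE_RATE         # pole angle
    a1, a2 = 2 * r * math.cos(theta), -r * r
    out, y1, y2 = [], 0.0, 0.0
    for x in signal:
        y = x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def vocal_tract(signal, formants):
    """Apply each formant resonator in turn: this chain is the 'filter'."""
    for centre_hz, bandwidth_hz in formants:
        signal = formant_filter(signal, centre_hz, bandwidth_hz)
    return signal


# Rough, illustrative formants (centre Hz, bandwidth Hz) for an "ah"-like vowel.
AH_FORMANTS = [(700, 80), (1200, 90), (2600, 120)]

n = SAMPLE_RATE // 10  # 100 ms of audio
voiced = vocal_tract(voiced_source(120, n), AH_FORMANTS)
whispered = vocal_tract(whispered_source(n), AH_FORMANTS)
```

Both outputs pass through the same filter, so they carry the same articulation. Only the source differs: the voiced signal has a pitch, the whispered one does not.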

“Bringing down the latency of AI conversions is my top priority. Our AI technology is so unique because it works in real-time. We make this happen with a lot of R&D in audio-to-audio processing.” – Tatsu Matsushima, AI Researcher at Whispp

Supportive communication technologies & categories

For individuals who face challenges in both the source and filter components of speech, such as those with impaired articulation or compromised voice quality, Augmentative and Alternative Communication (AAC) tools offer substantial assistance. AAC devices span from basic picture boards to advanced electronic systems that produce speech, enabling individuals to communicate effectively despite their impairments. These tools play a crucial role in bridging communication gaps and enhancing the quality of life for those with speech disabilities.

Supportive communication technologies play a vital role in improving communication for individuals with speech impairments. Various types of these technologies include Text-to-Speech (TTS) devices that convert typed text into synthetic speech, allowing those who can utilize manual input or eye-tracking technologies to communicate effectively. Speech-Generating Devices (SGDs) are equipped with both pre-programmed and customizable phrases to aid in daily communication and social interactions. Voice Output Communication Aids use both digitized pre-recorded human voices and synthesized speech, enabling users to express themselves via touchscreens and buttons. These tools significantly enhance the ability of individuals with speech disabilities to interact and engage with the world around them.

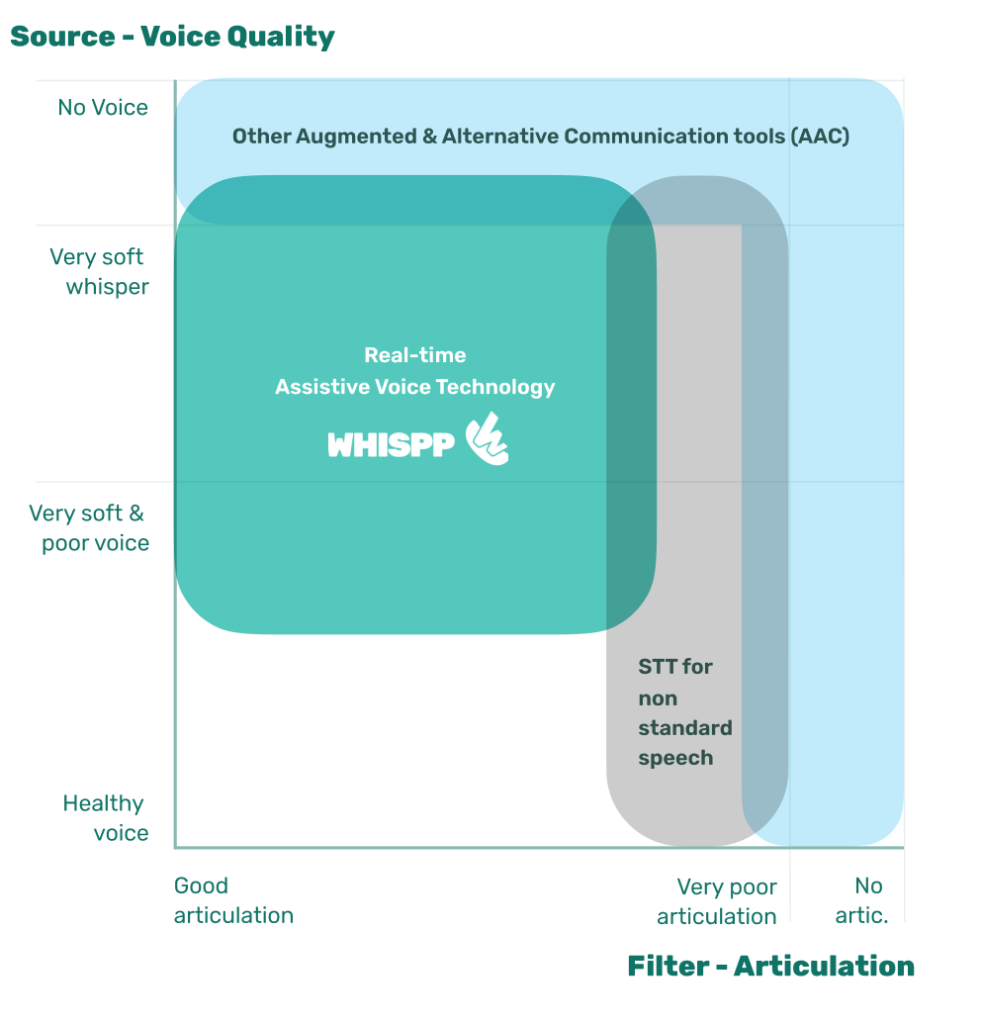

Using the Source-Filter Model discussed earlier, we can classify assistive speech technology based on a combination of the user’s capability to produce sufficient sound for intelligible speech and their ability to articulate clearly:

- Automatic Speech Recognition (ASR) for Non-Standard Speech: This technology is suited for individuals who can produce enough sound but have poor articulation due to conditions such as ALS (Amyotrophic Lateral Sclerosis), MS (Multiple Sclerosis), stroke, and Parkinson’s Disease. ASR systems are tailored to recognize and process speech patterns affected by these disorders.

- Other Augmentative and Alternative Communication (AAC) Technologies and Tools: These are designed for individuals who cannot produce any speech sound and/or have articulation that is too impaired to be recognized by ASR systems. This category is particularly relevant for conditions like locked-in syndrome or severe combinations of the disorders mentioned earlier.

- Real-Time Assistive Voice Technology (RAVT): This technology is intended for individuals who are unable to generate adequate sound but still have intelligible articulation. Conditions such as laryngeal cancer, vocal cord paralysis, and benign voice disorders like vocal cord polyps and cysts may require the use of RAVT to facilitate communication.

These classifications help determine the most appropriate type of technology to support individuals with varying speech impairments, ensuring they can communicate effectively despite their challenges.
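The three categories above can be read as a small decision rule over the source (sound production) and the filter (articulation). The Python sketch below is one possible reading, not a clinical tool. The three-level scales, the enum names, and the function `suggest_technology` are assumptions introduced for illustration. The case of impaired sound combined with impaired articulation is mapped to AAC, following the earlier remark that AAC tools help people with challenges in both components.

```python
from enum import Enum
from typing import Optional


class Sound(Enum):
    SUFFICIENT = "sufficient"      # enough sound for intelligible speech
    INSUFFICIENT = "insufficient"  # e.g. only a whisper
    NONE = "none"                  # no speech sound at all


class Articulation(Enum):
    INTELLIGIBLE = "intelligible"
    IMPAIRED = "impaired"              # unclear, but recognizable by tailored ASR
    UNRECOGNIZABLE = "unrecognizable"  # too impaired for ASR


class Technology(Enum):
    ASR = "ASR for non-standard speech"
    RAVT = "Real-time assistive voice technology"
    OTHER_AAC = "Other AAC technologies and tools"


def suggest_technology(sound: Sound, articulation: Articulation) -> Optional[Technology]:
    """Map a (source, filter) profile onto the categories described in the text."""
    # No sound at all, or articulation beyond what ASR can recognize.
    if sound is Sound.NONE or articulation is Articulation.UNRECOGNIZABLE:
        return Technology.OTHER_AAC
    # Source impaired, filter intact.
    if sound is Sound.INSUFFICIENT and articulation is Articulation.INTELLIGIBLE:
        return Technology.RAVT
    # Source intact, filter impaired.
    if sound is Sound.SUFFICIENT and articulation is Articulation.IMPAIRED:
        return Technology.ASR
    # Both impaired: treated here as an AAC case (an assumption of this sketch).
    if sound is Sound.INSUFFICIENT and articulation is Articulation.IMPAIRED:
        return Technology.OTHER_AAC
    # Sufficient sound and intelligible articulation fall outside the classification.
    return None


print(suggest_technology(Sound.INSUFFICIENT, Articulation.INTELLIGIBLE).value)
```

In practice the boundaries between these levels are gradual and depend on the individual, so a real assessment would not reduce to a lookup like this one.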

Figure 2. Categorization of assistive speech technology using the Source-Filter Model

Big tech and assistive tech companies primarily concentrate on Automatic Speech Recognition (ASR) and Augmentative and Alternative Communication (AAC) technologies. Both ASR and AAC solutions depend on generating text, which is subsequently synthesized by a computer or device. Although these technologies are highly beneficial for their target groups, they often introduce delays of about 2 to 3 seconds. This latency can hinder the flow of natural conversation, creating barriers to fluid communication. Consequently, despite their advantages, these technologies may not provide a fully adequate solution for many individuals, as the delays can disrupt the spontaneity and interactivity of everyday interactions.

Whispp’s solution: Real-time Assistive Voice Technology

“Drawing from my personal experience with a stuttering disability, I deeply understand the challenges, emotions, and realities associated with speech disabilities. I recognize that the delays inherent in current technologies make them inadequate for seamless communication. Consider the impracticality of a 2-3 second delay during a phone call or an in-person conversation: would such a setup be functional or desirable?” – Joris Castermans

Current advancements in the field predominantly focus on speech-to-text (STT) technologies. While beneficial for individuals with reduced articulation due to conditions like ALS, MS, stroke, and Parkinson’s Disease, the significant latency of these systems disrupts the natural flow of conversation, making them unsuitable for those who need real-time interaction.

In response to this, Real-Time Assistive Voice Technology (RAVT) represents a pivotal shift. Unlike traditional systems that rely on speech-to-text conversion, RAVT employs an audio-to-audio approach based on AI. This method allows for immediate, scalable, and language-independent conversion, offering a more dynamic and practical solution for those with voice disorders who retain clear articulation but cannot produce sound. This innovative approach could significantly enhance communication for those with speech impairments, fostering more natural and engaging interactions.

Real-time assistive voice technology that incorporates whisper-to-speech capabilities offers a transformative solution for those with compromised or nearly lost voice capabilities, addressing a significant need in the current landscape of assistive technologies. This approach is groundbreaking because it leverages the therapeutic benefits of whispering, which can be particularly advantageous for certain speech disorders resulting from neurological changes. For instance, individuals who experience severe stuttering can reduce their stuttering frequency by an average of 85 percent when they whisper. Similarly, those affected by conditions such as Spasmodic Dysphonia or Recurrent Respiratory Papillomatosis find that whispering allows them to speak more smoothly and fluently. By converting whispered speech into clear, audible speech, this technology significantly enhances communication capabilities and improves the quality of life for a previously underserved group, offering them a more seamless and effective way to interact with the world around them.

Figure 3. Whispp is highlighted by NHK Japan, raising awareness about Assistive Voice Technology on Japanese national TV.

How to advance assistive technologies

Collaborating with renowned institutions like the Netherlands Cancer Institute and participating in initiatives such as the Dutch Ministry of Social Affairs and Employment’s ‘Technology for Inclusion’ pilot can significantly expand the reach and effectiveness of assistive technologies.

Figure 4. Whispp user Ruud interviewed by the Dutch Ministry of Economic Affairs, to raise awareness about the potential of AI in the field of communication.

Our experiences have demonstrated that such partnerships not only propel technological advancements but also ensure that these innovations serve those who need them the most. Speaking at key conferences and engaging in impactful projects highlight how technological innovation can contribute to societal inclusion and enhance the quality of life for people with disabilities. These collaborations are essential for fostering both innovation and social change, driving progress in making technology accessible and beneficial to all.

Figure 5. Demonstrating the capabilities of Assistive Voice Technology to Microsoft’s CEO at CES 2024.

We encourage fellow professionals and innovators in the field to join us in establishing new partnerships and discovering innovative solutions. By working together, we can propel technology forward and effect profound social change, striving towards a more inclusive world for everyone. This collaborative effort is essential for harnessing the full potential of technology to improve accessibility and quality of life for individuals with disabilities, ultimately creating a society that values and supports all its members.

Get in touch with Whispp.
